We present X-Diffusion, a cross-sectional diffusion model tailored for Magnetic Resonance Imaging (MRI) data. X-Diffusion can generate an entire MRI volume from a single MRI slice or, optionally, from a few slices, setting new benchmarks for the accuracy of MRIs synthesized from extremely sparse observations. Its novelty lies in view-conditional training and inference on MRI volumes, which allows generalized MRI learning. The generated MRIs retain essential features of the original MRI, including tumour profiles, spine curvature, and brain volume, among others.

From a single MRI slice $x \in \mathbb{R}^{H \times W}$, we seek to synthesize the entire MRI volume $\mathcal{X} \in \mathbb{R}^{H \times W \times D}$. The fundamental idea stems from the analogy that a 3D volume can be built crosswise by stacking slices along a given direction, just like a loaf of bread. The full target volume can therefore be reconstructed from limited slices by generating each target slice, indexed by its depth $d \in \{1, 2, \dots, D\}$ in the MRI volume, conditioned on the direction $R$ in which the volume is oriented. This simplifies the learning of cross-sections because, with zero padding, the rotated MRI volume $R\mathcal{X}$ keeps the same size $H \times W \times D$ as the original volume. To simplify data processing, we use the same size along all directions ($H = W = D$).
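To make the "same size after rotation" point concrete, here is a minimal NumPy sketch, assuming a cubic volume, rotation about the volume centre, and nearest-neighbour sampling (the interpolation scheme X-Diffusion actually uses is not stated here). The helper names `rotate_volume` and `cross_section` are hypothetical. Output voxels whose source position falls outside the grid are set to zero, which plays the role of the zero padding described above, so the result is always $H \times W \times D$.

```python
import numpy as np


def rotate_volume(volume: np.ndarray, R: np.ndarray) -> np.ndarray:
    """Rotate a volume about its centre by the 3x3 rotation matrix R.

    Hypothetical helper: nearest-neighbour sampling, zero padding for voxels
    that map outside the grid, so the output shape equals the input shape.
    """
    shape = np.array(volume.shape)
    center = (shape - 1) / 2.0
    # Integer coordinates of every output voxel, shape (3, H*W*D), C order.
    grid = np.indices(volume.shape).reshape(3, -1).astype(float)
    # Inverse mapping: each output voxel pulls from R^{-1} p = R^T p (about the centre).
    src = R.T @ (grid - center[:, None]) + center[:, None]
    src = np.rint(src).astype(int)
    # Keep only source positions that lie inside the original grid.
    inside = np.all((src >= 0) & (src < shape[:, None]), axis=0)
    out = np.zeros(volume.size, dtype=volume.dtype)  # zero padding elsewhere
    out[inside] = volume[src[0, inside], src[1, inside], src[2, inside]]
    return out.reshape(volume.shape)


def cross_section(volume: np.ndarray, R: np.ndarray, d: int) -> np.ndarray:
    """Slice at depth d (1-based, as in the text) of the rotated volume."""
    return rotate_volume(volume, R)[:, :, d - 1]
```

For example, `cross_section(vol, np.eye(3), 1)` returns `vol[:, :, 0]`, the first slice of the unrotated volume, while any other rotation still yields an `H x W` slice.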

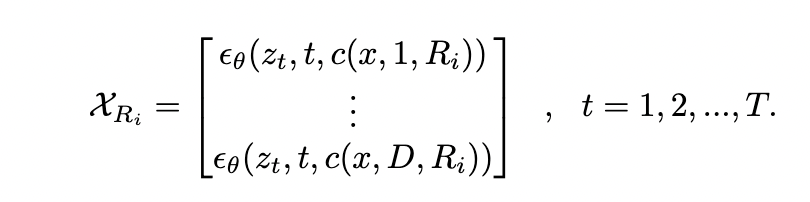

The X-Diffusion model is trained on cross-sections from all directions $R$ and all depths $d$, which allows it to generate the target slice for any rotation and depth. At inference, X-Diffusion is applied $D$ times, once for each $d \in \{1, 2, \dots, D\}$, from an arbitrary orientation $R_i$ to obtain the view-conditional volume $\mathcal{X}_{R_i}$.
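The inference procedure can be sketched as a loop over depth indices. In this minimal sketch, `sample_slice` is a hypothetical stand-in for one full reverse-diffusion run of the trained model, conditioned on the input slice, the orientation $R_i$ and the target depth $d$; its signature is an assumption, not the released X-Diffusion interface.

```python
from typing import Callable

import numpy as np

# Hypothetical sampler signature: (input_slice, rotation R_i, depth d) -> (H, W) slice.
SliceSampler = Callable[[np.ndarray, np.ndarray, int], np.ndarray]


def generate_volume(sample_slice: SliceSampler, input_slice: np.ndarray,
                    R: np.ndarray, D: int) -> np.ndarray:
    """Call the view-conditional sampler once per depth d = 1..D and stack the slices."""
    slices = [sample_slice(input_slice, R, d) for d in range(1, D + 1)]
    # Stack along the last axis to form the (H, W, D) volume X_{R_i}.
    return np.stack(slices, axis=-1)
```

With a dummy sampler such as `lambda x, R, d: np.full_like(x, d)`, depth slice `d` of the output is filled with the value `d`, which makes the stacking order easy to check. Since the $D$ calls in this sketch do not depend on one another, they could in principle be batched; the loop is kept sequential for clarity.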

One advantage of our cross-sectional diffusion is that it can learn and generate the volume $\mathcal{X}_{R_i}$ from any view direction $R_i$. During training, this allows X-Diffusion to learn from MRI cross-sections in any orientation, rather than only the three standard planes (coronal, sagittal, and axial).
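Training on arbitrary view directions requires a way to draw orientations $R$. One standard option, assumed here rather than taken from the paper, is to normalise a 4D Gaussian vector into a unit quaternion, which gives rotations distributed uniformly over SO(3). `random_rotation` and `sample_training_condition` are hypothetical helpers; the sampled $R$ would be used to rotate a training volume before extracting the target slice at depth $d$.

```python
import numpy as np


def random_rotation(rng: np.random.Generator) -> np.ndarray:
    """Draw a 3x3 rotation matrix uniformly from SO(3) via a random unit quaternion."""
    q = rng.normal(size=4)
    w, x, y, z = q / np.linalg.norm(q)  # unit quaternion (w, x, y, z)
    # Standard quaternion-to-rotation-matrix conversion.
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])


def sample_training_condition(rng: np.random.Generator, D: int) -> tuple[np.ndarray, int]:
    """Pick a random view direction R and a random target depth d in {1, ..., D}."""
    return random_rotation(rng), int(rng.integers(1, D + 1))  # upper bound is exclusive
```

Sampling from the full rotation group, instead of a fixed set of three axes, is what lets the training distribution cover oblique cross-sections as well as the coronal, sagittal, and axial planes.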

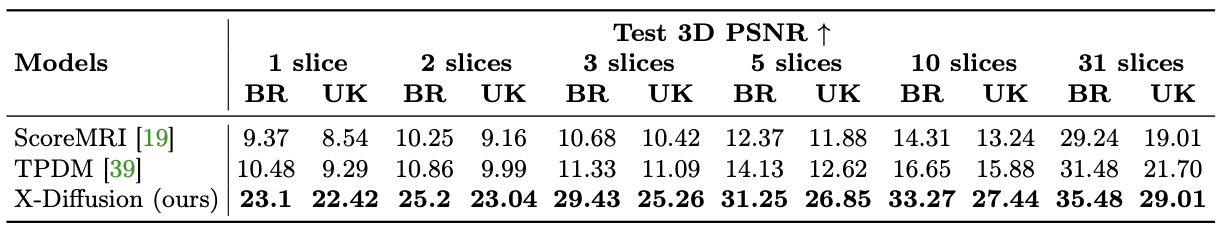

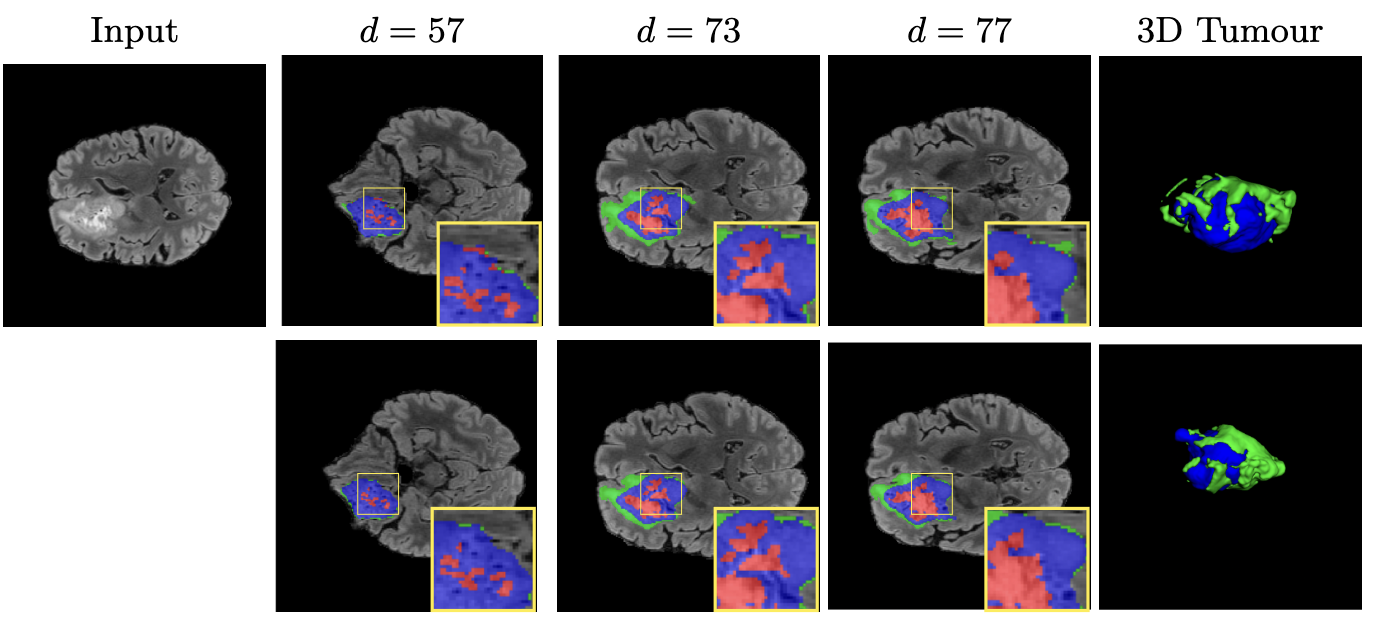

We show that X-Diffusion outperforms the baselines on the BRATS dataset (see Table 1 and Figure 1): from a single slice along any axis, it generates the full 3D volume. Furthermore, X-Diffusion preserves key features of the MRI, notably tumour profiles (see Figure 2). It also reconstructs out-of-domain data such as knee MRIs well (see Figure 4).

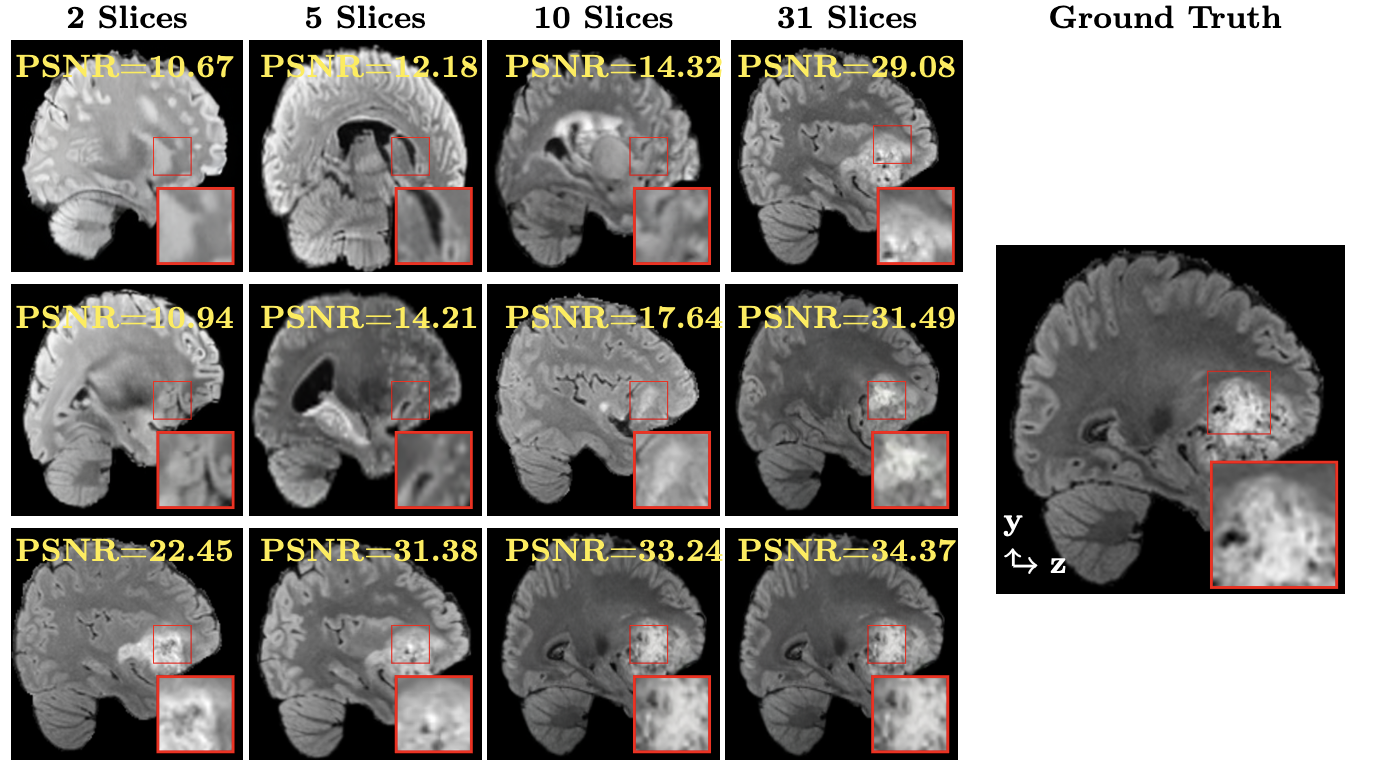

Table 1: Model Performance on Test Brain Data and Whole-Body MRIs. We compare MRI reconstruction by the baselines ScoreMRI [Chung et al., 2022] and TPDM [Lee et al., 2023] with our X-Diffusion model for varying numbers of input slices in training and inference. We report the mean 3D test PSNR on the BRATS (BR) brain dataset and the UK Biobank (UK) body dataset. X-Diffusion improves substantially over the baselines, especially when few input slices are available (most notably a single slice).
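A 3D PSNR treats the whole volume as one signal: $\mathrm{PSNR} = 10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, with the MSE taken over all $H \times W \times D$ voxels. The sketch below assumes intensities normalised to $[0, 1]$, so $\mathrm{MAX} = 1$; the exact normalisation used for BRATS and UK Biobank is not stated here, and `psnr_3d` is a hypothetical helper name.

```python
import numpy as np


def psnr_3d(generated: np.ndarray, target: np.ndarray, data_range: float = 1.0) -> float:
    """Volume-wise PSNR in dB; data_range is the assumed peak intensity."""
    diff = generated.astype(np.float64) - target.astype(np.float64)
    mse = np.mean(diff ** 2)  # averaged over every voxel of the volume
    if mse == 0:
        return float("inf")  # identical volumes
    return float(10.0 * np.log10(data_range ** 2 / mse))
```

A mean test PSNR, as reported in the table, would then be the average of `psnr_3d` over all test volumes.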

Figure 1. Visual Comparison of MRI Brain Reconstruction. We show a slice from the 3D brain volume generated by ScoreMRI [Chung et al., 2022] (top), TPDM [Lee et al., 2023] (middle), and X-Diffusion (bottom), each conditioned on a varying number of input slices.

Figure 2. Visualisations of 3D Brain Generation. We show slices from generated 3D brain MRI volumes at varying slice indices (top) and the corresponding ground-truth brain slices (bottom). The tumour segmentation map is overlaid on all output and ground-truth slices to highlight the differences, and the rightmost column shows the 3D tumour in the generated and ground-truth MRIs. Red marks the non-enhancing and necrotic tumour core, green the peritumoral edema, and blue the enhancing tumour core.

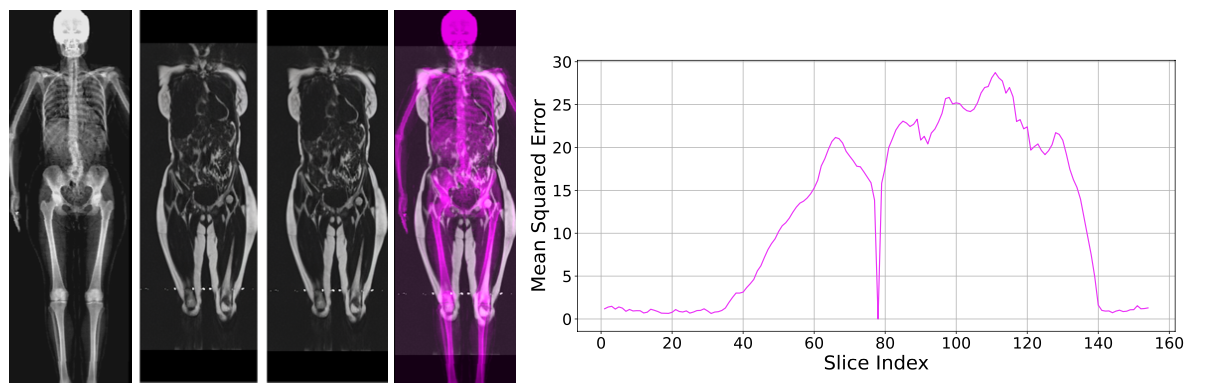

Figure 3. Alignment and Error Spread of MRI Generation. (left) From left to right: input DXA (dual-energy X-ray absorptiometry) scan, ground-truth MRI, generated MRI, and an overlay of the two modalities to check alignment. The 3D PSNR for this example is 26.38 dB. (right) Mean squared error (MSE) of the MRI generated from a single MRI slice (index 78) as a function of the output depth index, showing that the error is not uniformly distributed across the generated volume.
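The error-versus-depth curve described for Figure 3 (right) can be computed by averaging the squared error over each slice separately instead of over the whole volume. This sketch assumes the depth axis is the last array axis; `mse_per_depth` is a hypothetical helper name.

```python
import numpy as np


def mse_per_depth(generated: np.ndarray, target: np.ndarray) -> np.ndarray:
    """MSE of each depth slice of an (H, W, D) volume; returns an array of length D."""
    diff = generated.astype(np.float64) - target.astype(np.float64)
    return np.mean(diff ** 2, axis=(0, 1))  # average over H and W only
```

Entry `k` of the result is the MSE of slice `k` (0-based in NumPy), so plotting it against `k` gives a per-depth error profile; because every slice has the same size, its mean equals the volume-wide MSE.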

Figure 4. Out-of-Domain Generations of X-Diffusion. An example of 3D knee MRI generation by X-Diffusion from a single input slice: (left) input slice, (middle) generated 3D MRI, and (right) ground-truth 3D MRI.

Chung, H., Ye, J.C.: Score-based diffusion models for accelerated MRI. Medical Image Analysis p. 102479 (2022)

Lee, S., Chung, H., Park, M., Park, J., Ryu, W.S., Ye, J.C.: Improving 3D imaging with pre-trained perpendicular 2D diffusion models. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). pp. 10710–10720 (October 2023)

@misc{bourigault2024xdiffusion,
  title = {X-Diffusion: Generating Detailed 3D MRI Volumes From a Single Image Using Cross-Sectional Diffusion Models},
  author = {Emmanuelle Bourigault and Abdullah Hamdi and Amir Jamaludin},
  year = {2024},
  eprint = {2404.19604},
  archivePrefix = {arXiv},
  primaryClass = {eess.IV},
}